Measurements of Latency for Intel VMX Root — Non-root Transitions

Recently I’ve become interested in measuring whether Intel’s virtualization performance improves as µarchitecture generations go by. One source of overhead is the transition between root and non-root modes. There is some data collected by VMware [1], but the paper lacks information on CPUs produced after 2011. Besides, I found no detailed description of their measurement methods, so I decided to do some experiments myself.

Sources

I adapted some code found on GitHub to my needs. You can find my version here.

The code is based on vmlaunch by Vish Mohan. Take a look at the original post for details on what it does and why. I changed the code so that the VMRESUME/VM-exit round trip is repeated many times in a loop, which averages out random deviations.
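Repeating the round trip many times can be turned into a number roughly as follows. This is a minimal sketch, assuming the driver reads the TSC before and after each batch of N round trips and that the cost of an empty loop iteration has been measured separately. `batch_cost` and `median_cost` are hypothetical helpers, not functions from the actual driver, and taking the median over batches is only one reasonable way to suppress outliers; the real code may aggregate differently.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical helper: average cost of one round trip in a batch of
 * `iterations` VMRESUME/VM-exit cycles, from TSC readings taken before and
 * after the batch. A separately measured empty-loop cost is subtracted. */
uint64_t batch_cost(uint64_t tsc_start, uint64_t tsc_end,
                    uint64_t iterations, uint64_t loop_overhead)
{
    assert(iterations > 0 && tsc_end >= tsc_start);
    uint64_t per_iter = (tsc_end - tsc_start) / iterations;
    return per_iter > loop_overhead ? per_iter - loop_overhead : 0;
}

static int cmp_u64(const void *a, const void *b)
{
    uint64_t x = *(const uint64_t *)a;
    uint64_t y = *(const uint64_t *)b;
    return (x > y) - (x < y);
}

/* Hypothetical helper: median of per-batch costs (sorts `costs` in place).
 * A batch disturbed by an interrupt shows up as an outlier, which barely
 * moves the median, unlike the mean. */
uint64_t median_cost(uint64_t *costs, size_t n)
{
    assert(n > 0);
    qsort(costs, n, sizeof costs[0], cmp_u64);
    if (n % 2 == 1)
        return costs[n / 2];
    return costs[n / 2 - 1] + (costs[n / 2] - costs[n / 2 - 1]) / 2;
}
```

For example, with batch costs of 470, 480, 490, 500 and 9000 ticks (one batch hit by an interrupt), the median is 490, while the mean would be pulled above 2000.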

It is a Linux kernel driver that sets up a VMCS structure and then jumps into non-root mode. There the guest does a single thing: it causes a VM exit immediately.
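Setting up a VMCS starts with facts the CPU reports in the IA32_VMX_BASIC MSR (0x480): the VMCS revision identifier (bits 30:0), which must be written into the first 4 bytes of the VMXON region and of every VMCS region, and the number of bytes to allocate for those regions (bits 44:32). Both regions must also be 4 KiB-aligned. The sketch below only decodes a value that has already been read; in a kernel module it would come from a ring-0 MSR read such as the kernel's `rdmsrl` macro. That wiring, and the names `decode_vmx_basic` and `init_vmcs_region`, are assumptions for illustration, not a description of the vmlaunch code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Selected fields of IA32_VMX_BASIC (MSR 0x480), per Intel SDM Vol. 3, App. A.1. */
struct vmx_basic_info {
    uint32_t revision_id;  /* bits 30:0: goes into the first dword of each region */
    uint32_t region_size;  /* bits 44:32: bytes for VMXON and VMCS regions (<= 4096) */
    unsigned mem_type;     /* bits 53:50: memory type for the VMCS, 6 = write-back */
    int addr_32bit_only;   /* bit 48: region addresses limited to 32 bits if set */
};

/* Hypothetical helper: split a raw IA32_VMX_BASIC value into its fields. */
struct vmx_basic_info decode_vmx_basic(uint64_t msr)
{
    struct vmx_basic_info info;
    info.revision_id = (uint32_t)(msr & 0x7FFFFFFFu);
    info.region_size = (uint32_t)((msr >> 32) & 0x1FFFu);
    info.mem_type = (unsigned)((msr >> 50) & 0xFu);
    info.addr_32bit_only = (int)((msr >> 48) & 1u);
    return info;
}

/* Hypothetical helper: zero a VMCS region and stamp the revision ID.
 * Bit 31 of the first dword stays clear, meaning an ordinary (non-shadow) VMCS.
 * A real driver would pass a 4 KiB-aligned, physically contiguous buffer. */
void init_vmcs_region(uint32_t *region, size_t bytes, uint32_t revision_id)
{
    assert(region != NULL && bytes >= sizeof(uint32_t));
    memset(region, 0, bytes);
    region[0] = revision_id & 0x7FFFFFFFu;
}
```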

WARNING: the kernel module is completely unstable and may crash your system at random. Do not use it. I told you.

Userland

An auxiliary userland script does some nasty things, namely turning off CPU frequency scaling (Intel SpeedStep, through the Linux governor) and disabling all but one logical processor (through the Linux CPU hot-plug capability). The bad thing is that the script does not re-enable them after an experiment is over. Turning everything back on is rather trivial, but I was too lazy to clean up after myself. Besides, I recommend rebooting the computer after my driver has been unloaded in any case; it is safer this way.
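Both knobs are ordinary sysfs files, so the same preparation (and the cleanup the script skips) can be expressed in a few lines. This is a sketch, not the author's script: it assumes the standard Linux paths `/sys/devices/system/cpu/cpuN/cpufreq/scaling_governor` and `/sys/devices/system/cpu/cpuN/online`, root privileges, and a governor name such as `"performance"` that the active cpufreq driver actually offers. All function names are hypothetical.

```c
#include <stdio.h>
#include <string.h>

/* Build "<root>/devices/system/cpu/cpu<N>/<leaf>"; root is normally "/sys".
 * Returns 0 on success, -1 if the buffer is too small. */
int cpu_sysfs_path(char *buf, size_t len, const char *root, unsigned cpu,
                   const char *leaf)
{
    int n = snprintf(buf, len, "%s/devices/system/cpu/cpu%u/%s", root, cpu, leaf);
    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}

/* Write a short string to a sysfs attribute. Returns 0 on success, -1 on error. */
int write_sysfs(const char *path, const char *value)
{
    FILE *f = fopen(path, "w");
    if (!f)
        return -1;
    size_t len = strlen(value);
    int ok = fwrite(value, 1, len, f) == len;
    if (fclose(f) != 0) /* a rejected value is reported when the buffer is flushed */
        ok = 0;
    return ok ? 0 : -1;
}

/* Set the same cpufreq governor on CPUs 0..ncpus-1; do this while they are online. */
int set_governor_all(const char *root, unsigned ncpus, const char *governor)
{
    char path[256];
    for (unsigned cpu = 0; cpu < ncpus; cpu++) {
        if (cpu_sysfs_path(path, sizeof path, root, cpu, "cpufreq/scaling_governor") != 0 ||
            write_sysfs(path, governor) != 0)
            return -1;
    }
    return 0;
}

/* Take CPUs 1..ncpus-1 offline (online = 0) or bring them back (online = 1).
 * CPU 0 is skipped because it usually cannot be hot-unplugged. */
int set_secondary_cpus_online(const char *root, unsigned ncpus, int online)
{
    char path[256];
    for (unsigned cpu = 1; cpu < ncpus; cpu++) {
        if (cpu_sysfs_path(path, sizeof path, root, cpu, "online") != 0 ||
            write_sysfs(path, online ? "1" : "0") != 0)
            return -1;
    }
    return 0;
}
```

Calling `set_governor_all("/sys", ncpus, "performance")` and then `set_secondary_cpus_online("/sys", ncpus, 0)` mirrors the preparation; calling `set_secondary_cpus_online("/sys", ncpus, 1)` afterwards undoes the hot-plug part. The `root` parameter exists only so the path logic can be exercised outside a real `/sys`.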

Compiling

The cmd script is meant to build the .ko file in the same directory. Adjust it to point to your kernel tree.

I tried it on the 64-bit flavors of Ubuntu 12.04 and Ubuntu 14.04. The driver builds and works on both, but on the more recent release it fails to unload, crashing with a kernel page fault. Not being a great kernel hacker, I do not know why yet. I have yet to compile this code on openSUSE, as I have some trouble with its kernel sources being organized differently than in Ubuntu. Or I’ll just boot from a live system, run my experiments, and run away.

Results

The exp folder contains the experimental data I have managed to collect so far. I am looking for systems with different variants of Intel VT-x. My ultimate plan is to collect data for as many different Intel processors as possible, including these µarchitectures:

- NetBurst of Pentium 4 (models 662 and 672), a rare thing to come by these days.

- Penryn

- Nehalem

- Westmere

- Sandy Bridge (done)

- Ivy Bridge

- Haswell

- Broadwell

Also, some Intel Atoms support VT-x as well; I wish I could lay my hands on one, as the ones I own cannot do the VT-x magic.
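Whether a given machine qualifies, and which µarchitecture it is, can be read from CPUID leaf 1: EAX encodes family and model, and bit 5 of ECX reports VMX support. Separately, firmware may lock VMX off through IA32_FEATURE_CONTROL (MSR 0x3A), where bit 0 is the lock bit and bit 2 enables VMX outside SMX. The sketch below only decodes register values that were already obtained; on GCC and Clang, CPUID can be executed with `__get_cpuid` from `<cpuid.h>`, while the MSR needs ring 0 or the Linux msr driver. The function names are hypothetical.

```c
#include <stdint.h>

/* Display family from CPUID.1:EAX; the extended family is added only for family 0xF. */
unsigned cpu_family(uint32_t eax)
{
    unsigned family = (eax >> 8) & 0xF;
    if (family == 0xF)
        family += (eax >> 20) & 0xFF;
    return family;
}

/* Display model from CPUID.1:EAX; the extended model is used for families 6 and 0xF. */
unsigned cpu_model(uint32_t eax)
{
    unsigned family = (eax >> 8) & 0xF;
    unsigned model = (eax >> 4) & 0xF;
    if (family == 0x6 || family == 0xF)
        model |= ((eax >> 16) & 0xF) << 4;
    return model;
}

/* CPUID.1:ECX bit 5: the processor implements VMX. */
int vmx_supported(uint32_t ecx)
{
    return (int)((ecx >> 5) & 1u);
}

/* IA32_FEATURE_CONTROL: locked (bit 0) with "VMX outside SMX" (bit 2) clear
 * means firmware has disabled VT-x and software cannot turn it back on. */
int vmx_blocked_by_firmware(uint64_t feature_control)
{
    return (feature_control & 0x1u) && !(feature_control & 0x4u);
}
```

For instance, a CPUID.1:EAX value of `0x000206A7` decodes to family 6, model `0x2A` (42).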

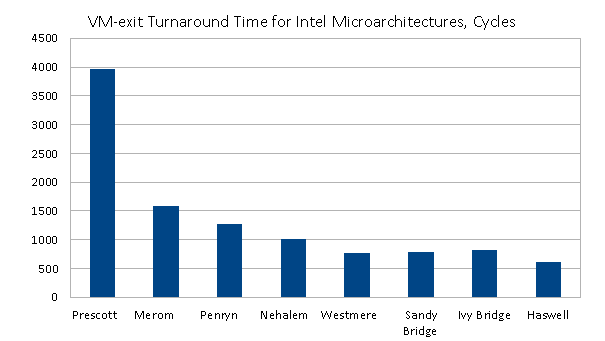

So far, here is the comparison of VM-exit + VMRESUME round-trip latency for different Intel IA-32 microarchitectures. The chart combines data from [1] with my own measurements; the two agree pretty well where they overlap.
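When a chart mixes machines with different clock rates, it helps to be explicit about whether latencies are counted in TSC ticks or in nanoseconds. A small conversion sketch, assuming an invariant TSC that ticks at a constant rate `tsc_khz` regardless of the current core frequency (on x86 Linux the kernel keeps its calibrated value in a variable of the same name). Whether the driver reports ticks or core cycles is not stated here, so treat `ticks_to_ns` as a hypothetical helper.

```c
#include <assert.h>
#include <stdint.h>

/* Convert TSC ticks to nanoseconds, assuming a constant TSC rate of tsc_khz:
 * ns = ticks * 1e9 / (tsc_khz * 1e3) = ticks * 1e6 / tsc_khz, rounded to nearest.
 * The intermediate product fits in 64 bits for ticks below about 1.8e13. */
uint64_t ticks_to_ns(uint64_t ticks, uint64_t tsc_khz)
{
    assert(tsc_khz > 0);
    return (ticks * 1000000u + tsc_khz / 2) / tsc_khz;
}
```

For example, 1000 ticks on a 2 GHz TSC (`tsc_khz` = 2000000) correspond to 500 ns.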

References

[1] Poon W.-C., Mok A. Improving the Latency of VMExit Forwarding in Recursive Virtualization for the x86 Architecture. In: 45th Hawaii International Conference on System Sciences (HICSS), 2012, pp. 5604–5612. DOI: 10.1109/HICSS.2012.320.