Tests on a Venn diagram

This is very likely not a new idea. I have most probably seen it somewhere but have since forgotten where. By now it feels as if it were my own idea, so I wanted to share it.

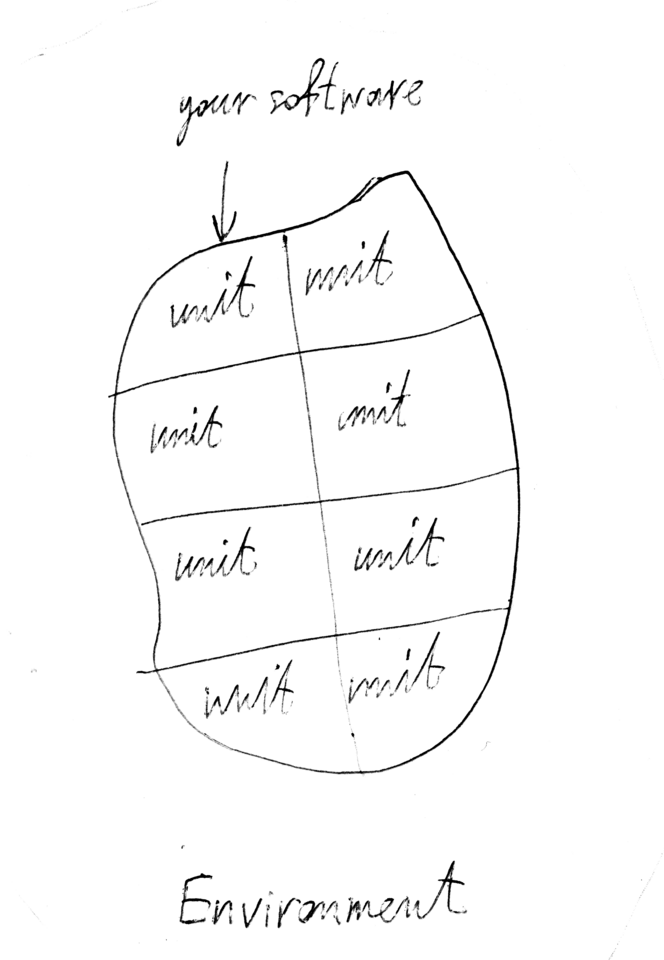

Any non-trivial software application has a structure with many units of behavior interacting with each other:

There may be smaller units within units, but that fact does not change the discussion that follows. We won’t be discussing what a unit is either. What is important is that you can test each of them in isolation, by mocking/stubbing out its dependencies on other units and on the outside world. The idea is that individual units have few dependencies.
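As a minimal sketch of what "testing a unit in isolation" can look like, consider the Python example below. The `PriceCalculator` class and its `tax_service` dependency are hypothetical names invented for illustration; only `unittest.mock.Mock` comes from the standard library.

```python
from unittest.mock import Mock


class PriceCalculator:
    """Hypothetical unit: computes a gross price using a tax-rate provider."""

    def __init__(self, tax_service):
        # Dependency on another unit; replaced by a mock in the test below.
        self.tax_service = tax_service

    def gross(self, net, country):
        rate = self.tax_service.rate_for(country)
        return round(net * (1 + rate), 2)


def test_gross_applies_rate():
    # Stub out the dependency so only PriceCalculator's behavior is observed.
    tax_service = Mock()
    tax_service.rate_for.return_value = 0.25

    calc = PriceCalculator(tax_service)

    assert calc.gross(100.0, "SE") == 125.0
    tax_service.rate_for.assert_called_once_with("SE")


test_gross_applies_rate()
```

The test says nothing about whether a real tax service would accept `"SE"` or return a fraction rather than a percentage. That question belongs to the boundary between the two units, not to either unit.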

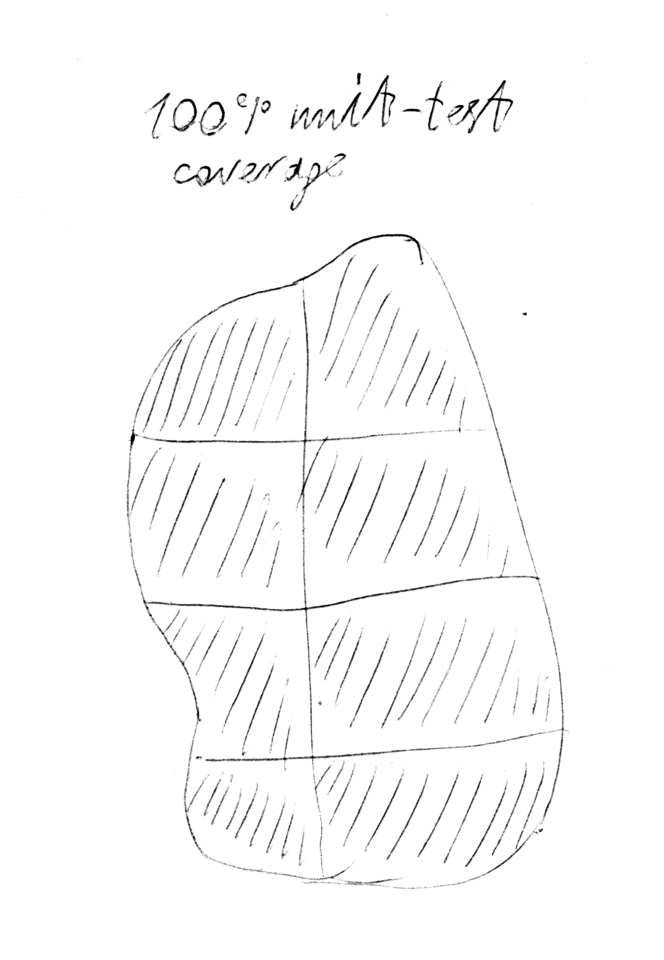

With tests, you can achieve a certain level of test coverage. It can be measured in different ways (percentage of code lines, expressions, branch conditions etc. reached). Higher coverage means that less of the unit’s behavior is untested. It also means higher confidence in the unit’s quality (i.e. absence of bugs and adherence to specifications). 100% coverage for a unit means that no additional test could produce an outcome that its existing suite of tests does not already observe. Whether or not it is easy or reasonable to strive for 100% unit test coverage is also outside the scope of this discussion.

Let’s assume we have 100% test coverage of all individual units:

Is it enough to say that our application is fully tested? No. This is a situation where the sum of the components is not equal to the whole.

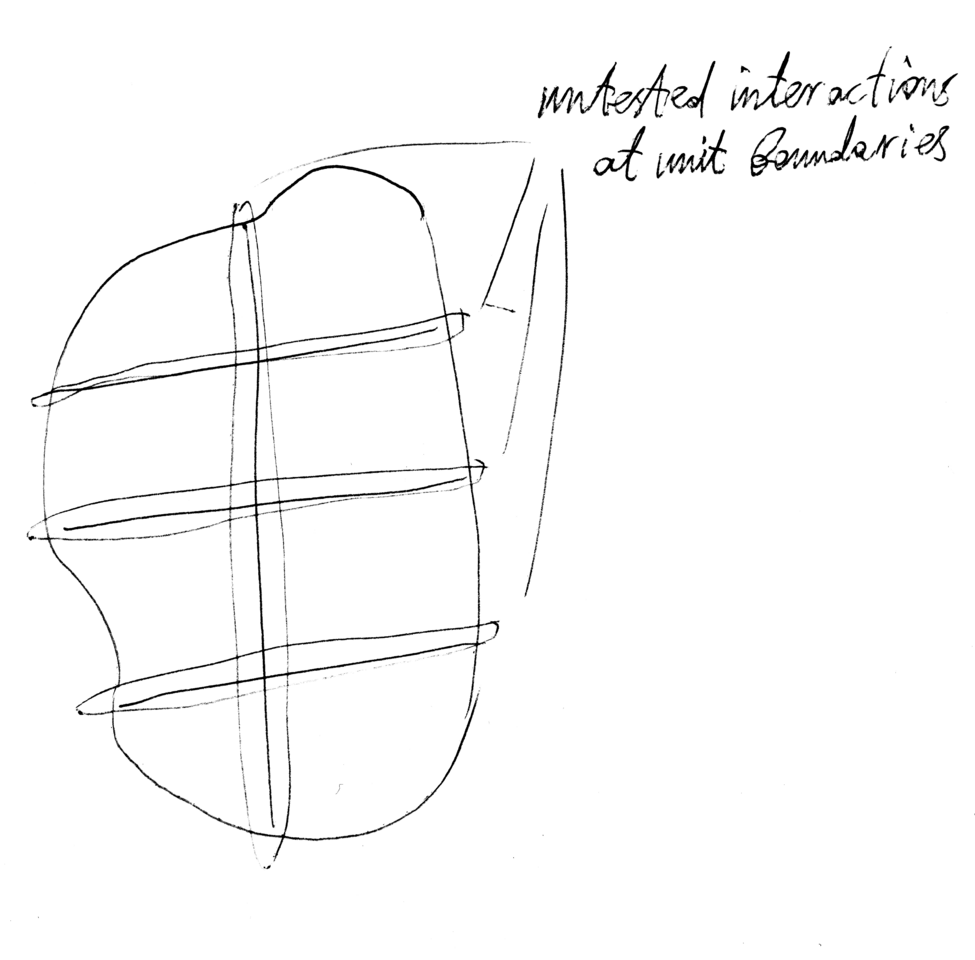

What the unit tests fail to reach are the boundaries between individual units, and interactions between units that cross these boundaries:

Boundaries should have no behavior of their own to speak of (otherwise they’d be units). APIs, ABIs, class hierarchies, data type layouts and other kinds of constraints are boundaries.
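To illustrate how fully tested units can still disagree at a boundary, here is a small sketch. Both functions and the record layout are hypothetical. The boundary in question is the shape of the dictionary passed from one unit to the other.

```python
def parse_request(raw):
    """Unit A (hypothetical): turns a string like 'id=42' into a record."""
    _, value = raw.split("=")
    return {"user_id": int(value)}


def greet(record):
    """Unit B (hypothetical): expects the record to use the key 'userId'."""
    return f"Hello, user {record['userId']}"


# Unit tests: each unit behaves as specified when tested in isolation.
assert parse_request("id=42") == {"user_id": 42}
assert greet({"userId": 42}) == "Hello, user 42"

# Crossing the boundary: the two units disagree on the record layout.
try:
    greet(parse_request("id=42"))
    boundary_ok = True
except KeyError:
    boundary_ok = False

print(boundary_ok)  # False
```

Neither unit test is wrong, and neither unit has untested lines. The defect lives entirely in the data layout that the two units are supposed to share.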

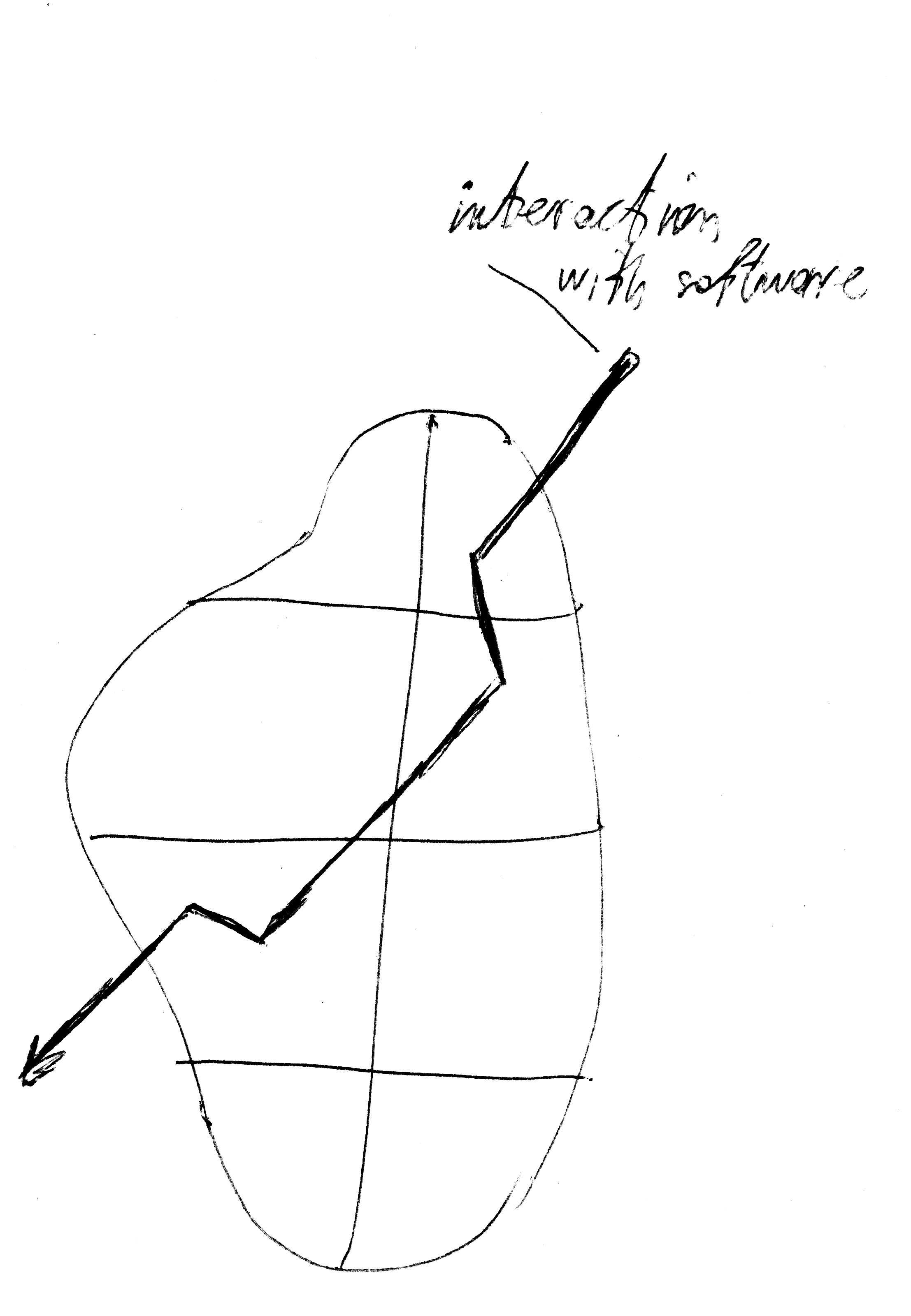

One more observation is that a typical interaction of any software with the external world (a transaction, request processing, event handling etc.) consists of behaviors arising both inside the units and on the boundaries, internal ones as well as those with the external environment:

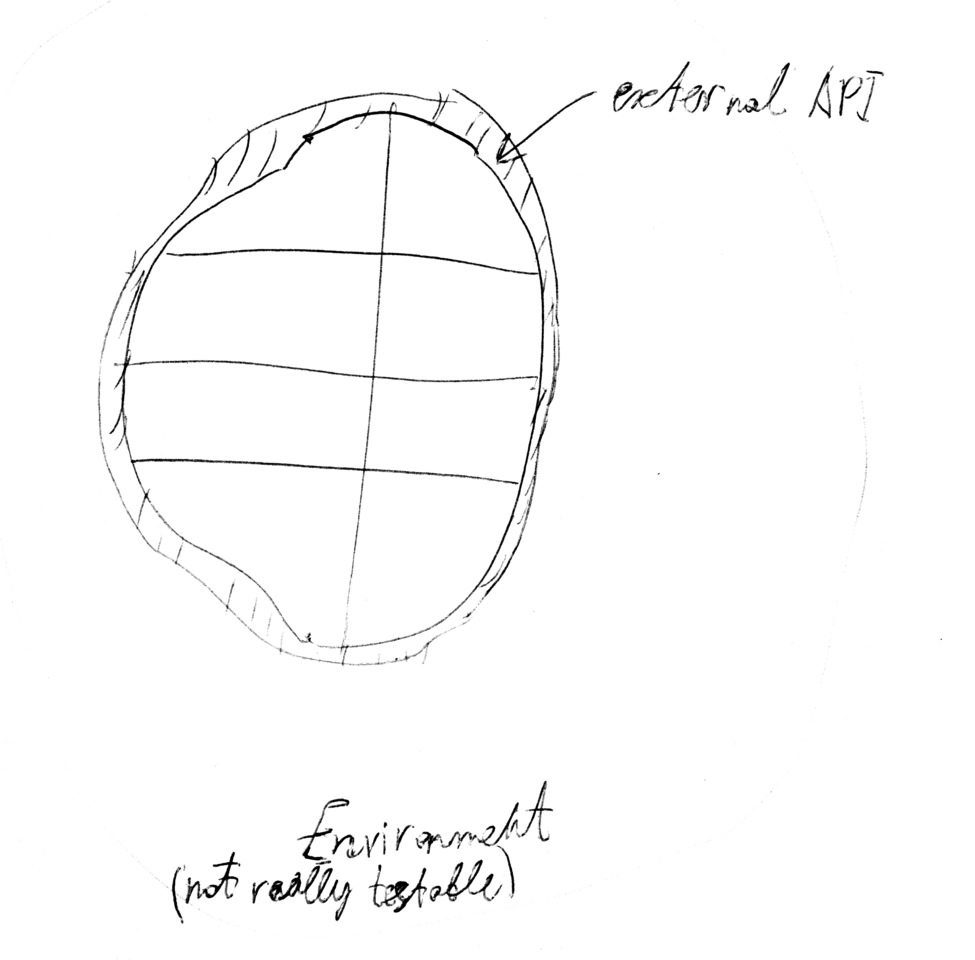

And speaking of the environment, it is actually very hard to check or guarantee that it does what you and your application suppose it should do. While it is possible to (at least partially) test the environment in which your application is currently executing, that is outside the scope of this discussion. For now, we can only trust that the environment does not misbehave.

What to do with behaviors across the boundaries? Test them too! Such tests are meant to exercise one or more units and boundaries integrated together. They should no longer be called unit tests; they are integration tests. Ideally, a single integration test case should exercise at most one interaction with the external world. It may do even less than that, e.g. test an interaction between two units that do not touch the outside world, by mocking the necessary environment.

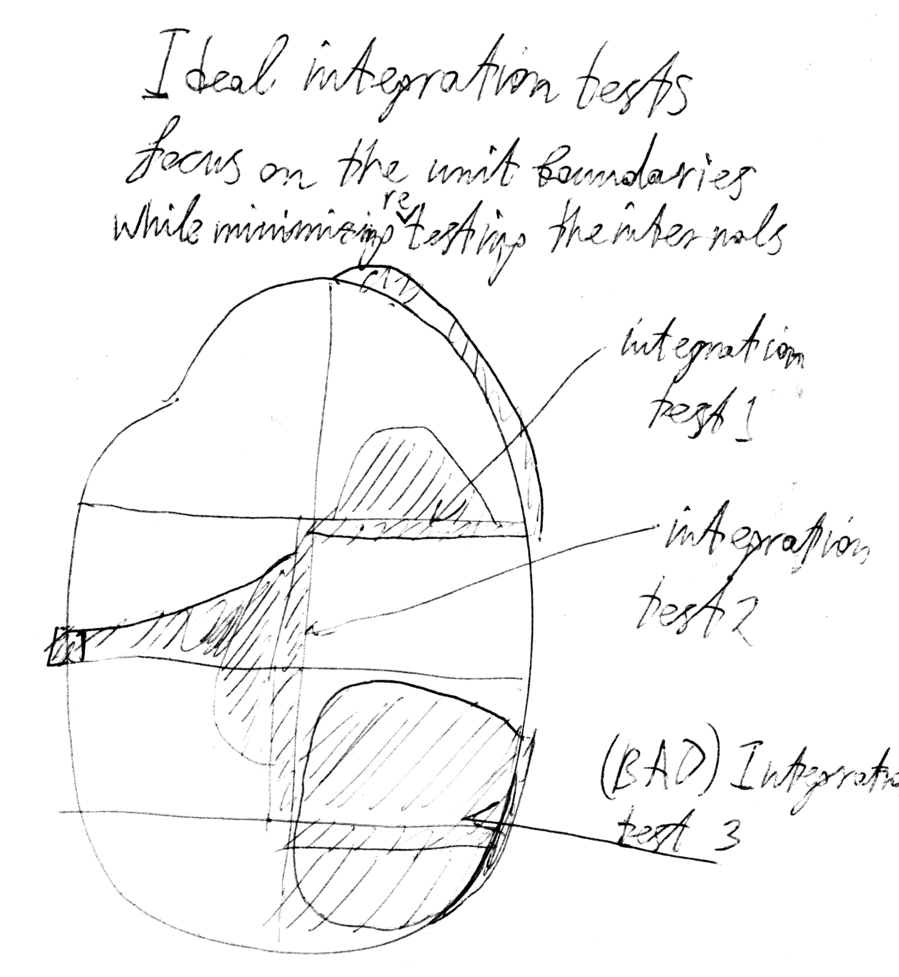

To make an integration test as little redundant as possible, it should strive to cover as much of a chosen boundary as possible, but as little as possible of the units across that boundary. With the assumption that we already have 100% unit test coverage, any behavior inside units touched by the integration test has already been observed by existing unit tests. Observing it again, and thereby depending on it, only contributes to slower execution and higher fragility of such an integration test.

In the next picture, test 1 and test 2 follow this rule: they are mostly focused on the boundaries and invoke only a limited amount of behavior from the connected units. Test 3, in contrast, touches both the boundary and most of the two units using it.

Of course, it is not realistic to avoid testing at least something inside the units. Remember that boundaries have no behavior of their own. To “exercise” them, some sort of behavior has to come from the surrounding units or mocks. The point is to keep each type of test focused on its own goal.
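The difference between a focused and an unfocused integration test can be sketched as follows. The two units and the JSON payload between them are hypothetical, and the example assumes that the validation inside `encode_order` is already covered by that unit's own tests.

```python
import json


def encode_order(order_id, quantity):
    """Unit A (hypothetical): validates an order, then serialises it."""
    if quantity <= 0:
        raise ValueError("quantity must be positive")
    return json.dumps({"id": order_id, "qty": quantity})


def decode_order(payload):
    """Unit B (hypothetical): parses the payload produced by unit A."""
    data = json.loads(payload)
    return data["id"], data["qty"]


def test_boundary_round_trip():
    # Focused: one plain value crosses the boundary, checking only that
    # both units agree on the payload format.
    assert decode_order(encode_order(7, 3)) == (7, 3)


def test_round_trip_with_revalidation():
    # Unfocused: repeats unit A's validation checks, which its unit tests
    # are assumed to cover already. Slower and more fragile, no new coverage.
    for bad_quantity in (0, -1, -100):
        try:
            encode_order(7, bad_quantity)
            raise AssertionError("expected ValueError")
        except ValueError:
            pass
    assert decode_order(encode_order(7, 3)) == (7, 3)


test_boundary_round_trip()
test_round_trip_with_revalidation()
```

Both tests pass, but only the first one would survive unchanged if the validation rules inside `encode_order` were later revised.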

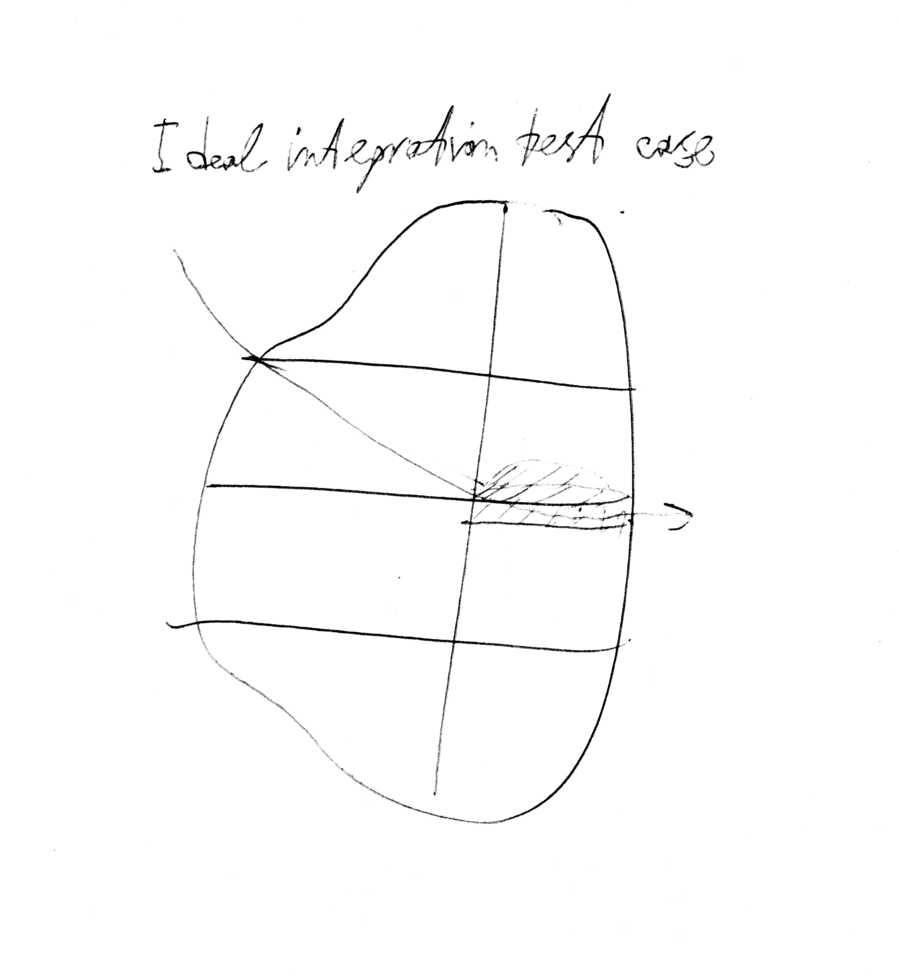

In the picture below, an integration test case touches very little of the units but a lot of the boundary between them. That is the ideal case for it.

Why are integration tests often (much) slower than unit tests? It is because of the wasteful duplication of test coverage that they provide. For the same reason, they are likely to be more fragile: depending on more things inevitably means having to react when those things change.

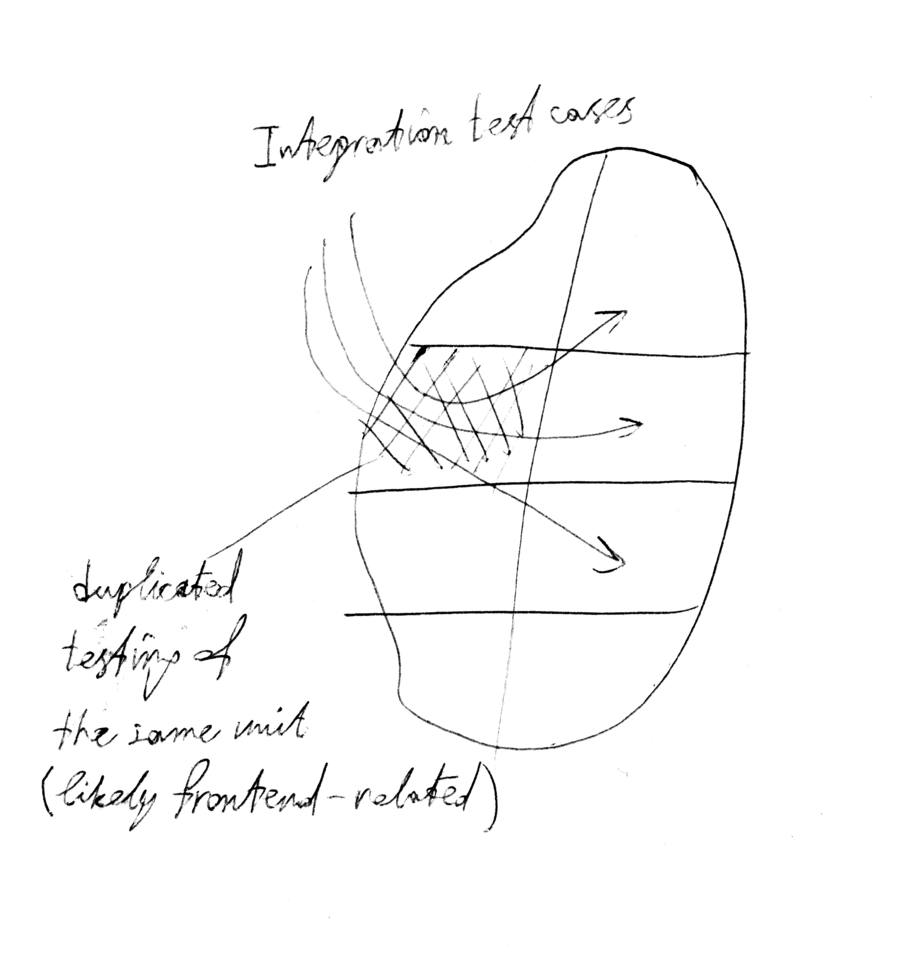

Consider the following example, which is quite common in practice. An application has a frontend unit. It can be a GUI, an interpreter, an authorisation gate etc. All integration test scenarios written for the application are required to pass through this frontend unit before they can reach further units and interact with them:

As a result, the frontend unit is “redundantly covered”. Or rather, exercising it more than once contributes nothing to its test coverage and, as such, does not increase confidence in its quality. At the same time, the time spent by all integration tests on passing through the frontend is wasted.
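A rough sketch of this situation, with a hypothetical authorisation-gate frontend and a call counter added purely for illustration:

```python
calls = {"frontend": 0}  # illustration only: counts passes through the frontend


def frontend(request):
    """Hypothetical frontend unit: authorises and unpacks every request."""
    calls["frontend"] += 1
    if request.get("token") != "secret":
        raise PermissionError("not authorised")
    return request["action"], request["args"]


def add(a, b):
    return a + b


def mul(a, b):
    return a * b


HANDLERS = {"add": add, "mul": mul}


def application(request):
    # Every scenario has to pass the frontend before reaching a handler.
    action, args = frontend(request)
    return HANDLERS[action](*args)


# Three integration scenarios, each aimed at a different handler.
assert application({"token": "secret", "action": "add", "args": (2, 3)}) == 5
assert application({"token": "secret", "action": "mul", "args": (2, 3)}) == 6
assert application({"token": "secret", "action": "add", "args": (0, 0)}) == 0

print(calls["frontend"])  # 3
```

All three scenarios take the same path through `frontend`, so the second and third passes observe nothing about it that the first one did not.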

Summary

- Unit tests can attest to the quality of units.

- Unit tests do not reach across the boundaries and cannot provide feedback about them.

- Integration tests should strive to target boundaries, not the units on either side of them.

- Using integration tests instead of unit tests to cover units is counterproductive. It leads to slower and more fragile tests.